Digitizing Occasion Maps

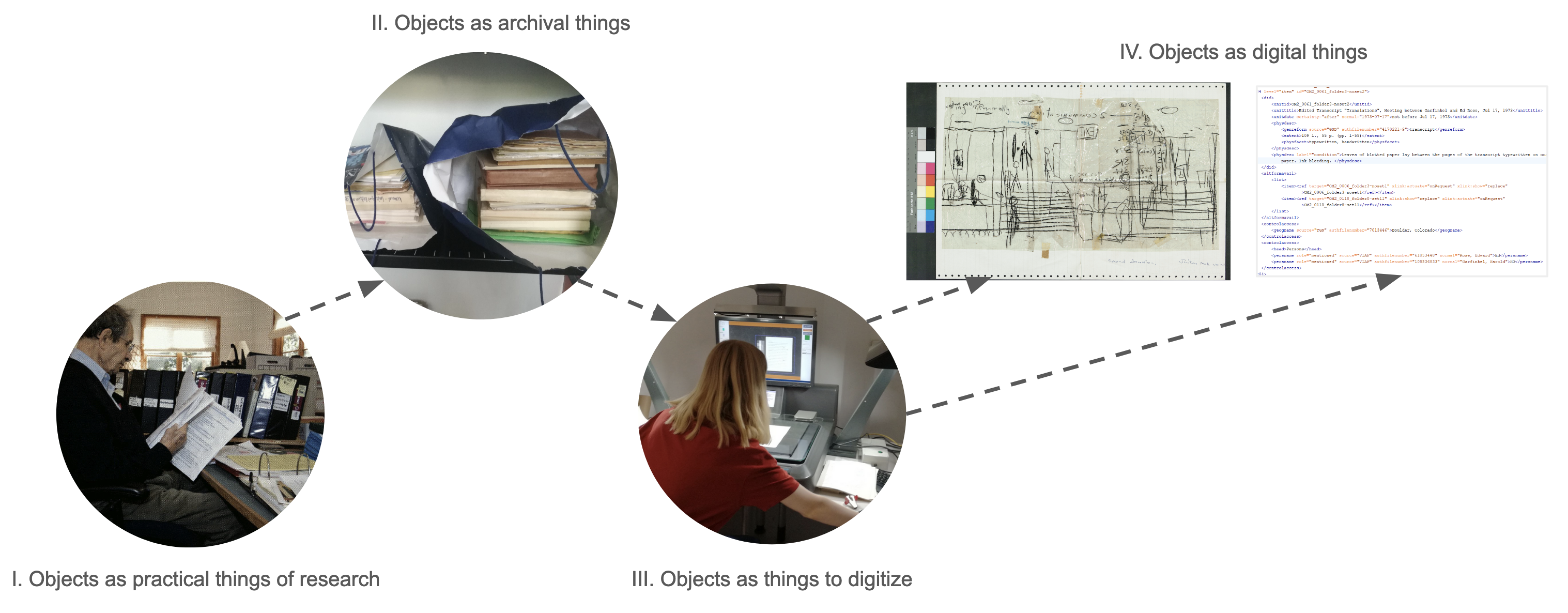

The sub-collection Occasion Map Papers arrived at the offices of the Cologne Center for eHumanities in three stacks. At first glance, the stacks have a nested structure, hinting at a hierarchical order of things. Although this is a common way of storing and organizing analog documents, with its own internal logic, it can cause trouble when the documents are carried over from personal use into archival logic.

One specific trouble was defined as the starting point for digitizing the Occasion Map Papers: object constitution.

Memory institutions collect, preserve, organize, exhibit and grant access to culturally, informatively and historically significant objects from heterogeneous domains, because these objects act as proxies for “epistemic things” of real-world phenomena. Through these processes, objects become things of knowledge. This systematic indexing of reality through the organization of objects can be seen as a process of intellectual measurement, which in turn is the product of a specific, context-dependent grammar.

While organizational guidelines are usually in place, the measurement itself is carried out through ad hoc decisions, most importantly decisions about what counts as a unit. Folders, envelopes and boxes create physical groupings of things, which can be organized and understood in categorically, semantically, culturally and practically different ways. Additionally, one object can have multiple practical states (e.g. a foldable map), which can be perceived and recorded as a whole or as separate entities.

Therefore, this form of “measurement” cannot claim the status of objectivity, and it cannot fully exhaust the significance of the objects. In particular, the loss of context caused by separation from the initial cultural practices (being practical objects of ethnomethodological studies) and modes of circulation (serving as evidence or as a product of research results) cannot be counteracted solely through the terminological or functional categorization of archival practice.

This trouble deepens through digitization, because physical materiality, the last carrier of the traces of practical meaning, is lost when the objects are reduced to their common denominator. This practice of digitization is common and suggests objectivity and efficiency. The suggestion is made accountable through highly detailed digitization guidelines, which de facto try to eliminate the unavoidable need for ad hoc decisions.

Creating Images

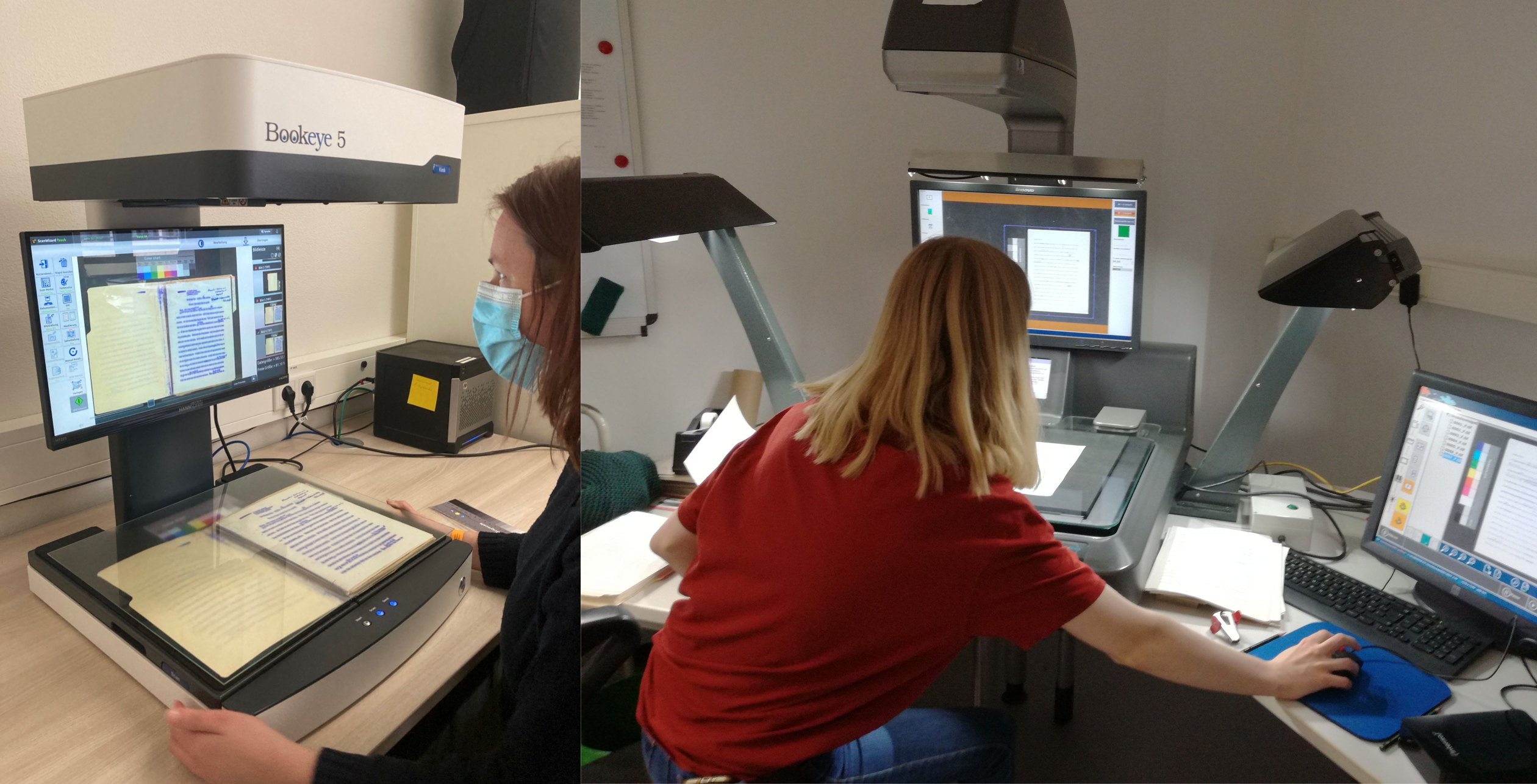

The digitization of the Occasion Map Papers was carried out as the lived work of executing archival practices, counteracting the urge to rely on best practices as a means of making a supposed objectivity accountable. To observe the particularities, two differing practices were executed: bottom-up and top-down.

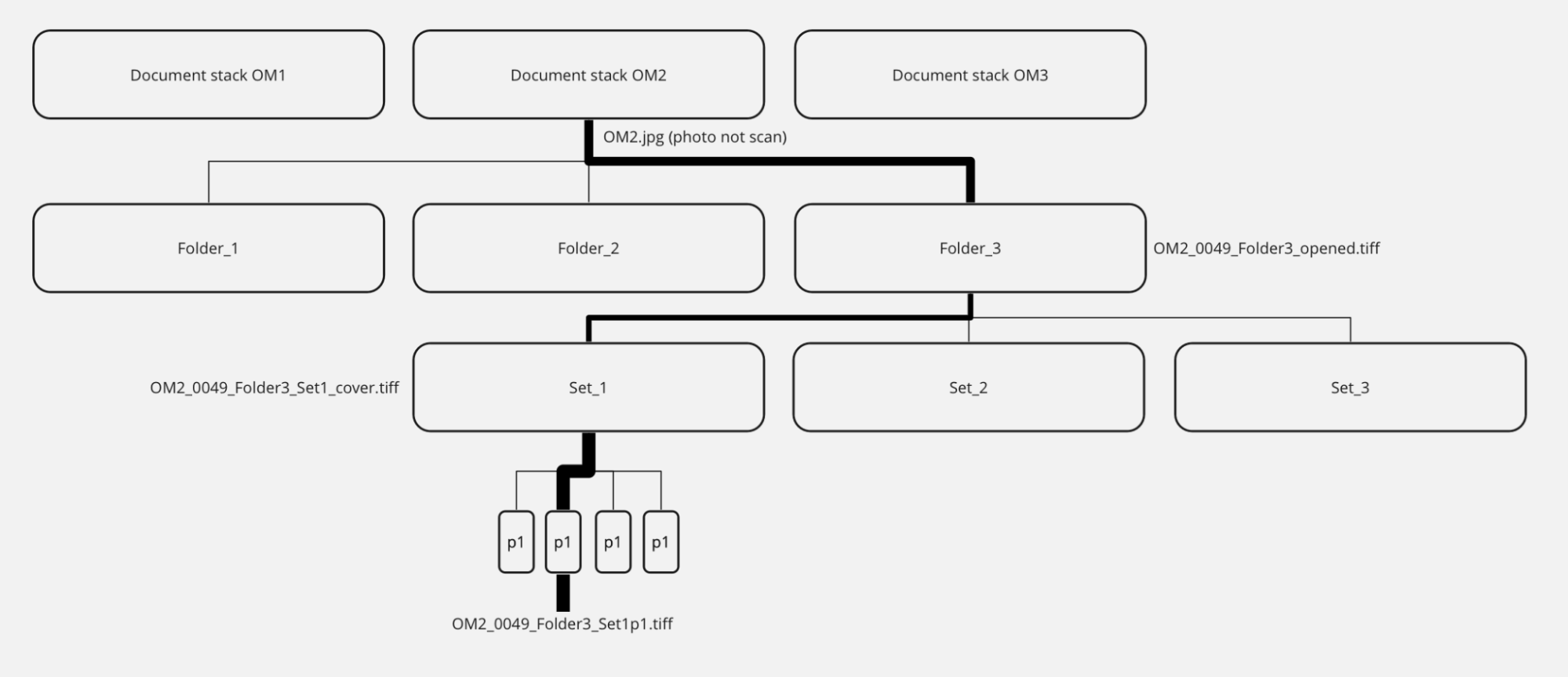

On the one hand, digitization followed the bottom-up principle: the units were defined as the single scannable objects, accompanied by a sheet in which the observable information was recorded. The “as-is” approach was central here, and no assumptions about semantic togetherness were made. The structure and hierarchical order of the documents were taken naively, without imposing any conventions about what constitutes an archival unit. Every state of the same object was treated as a singular unit, meaning that the sheet had a separate row for every scan, containing information on its location in the stack, its physical particularities and anomalies.

Every sheet of paper constitutes its own material object, and any materially distinct grouping of objects (a set of papers stapled together, a folder of documents, an envelope) constitutes another object that should be treated as such. Consequently, the topmost level consisted of the three stacks of folders, which were photographed as items in their own right, even though their division into three stacks seemed arbitrary and unrelated to their content. The guiding question throughout digitization was “Within the technological limitations, how can we digitize the material in a way that does not detach and decontextualize the scanned images from their material and practical context?” Therefore, a folder should be photographed before any documents are taken out of it, a stapled document before its staples are removed, and so on. Each layer of nesting should be recorded as metadata and in the object signature.

Bottom-Up Metadata

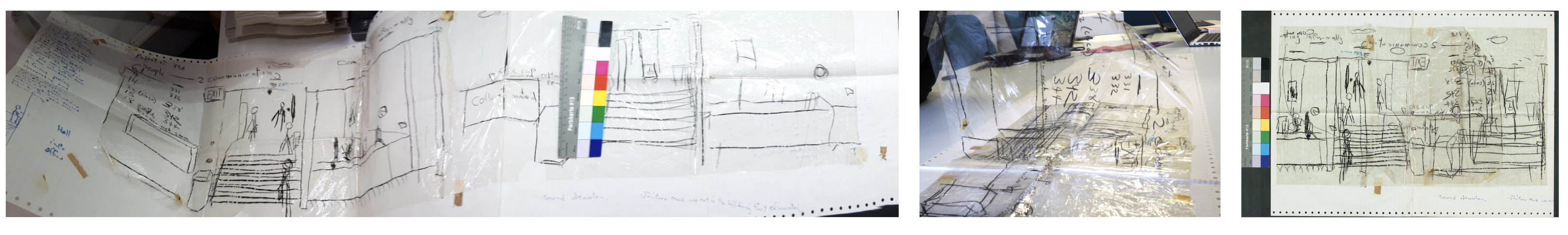

Complex cases that could not be described in their entirety by this system were discussed, and the vocabulary and logic of the metadata were adapted accordingly. In the course of the project, the basic descriptive elements were augmented by the keywords “parts” and “states”. “Parts” allow oversized objects to be scanned in multiple images while tracing their relation to one physical object. “States” were used for objects that could be manipulated, such as a foldable pamphlet or sheets of paper that were glued together and could not be separated non-destructively. Using states also allows some traces of materiality and interactivity to be retained in the digital object (for example, the unfolding of a pamphlet that was digitized in multiple folded states).
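To make the logic of nesting, parts and states concrete, here is a minimal sketch of what one row of such a scan sheet could look like as a data structure. It is not the project's actual sheet: the class name `ScanRecord`, the field names and the signature format (`/` between nesting levels, `_p` for parts, `_s-` for states) are all hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ScanRecord:
    """One row of a hypothetical bottom-up scan sheet: one row per image."""

    path: tuple[str, ...]        # nesting levels, outermost first (hypothetical names)
    part: Optional[int] = None   # n-th image of an oversized object scanned in pieces
    state: Optional[str] = None  # state of a manipulable object, e.g. "folded"
    notes: str = ""              # physical particularities and anomalies
    tags: list[str] = field(default_factory=list)  # free, unnormalized tags

    @property
    def signature(self) -> str:
        # Every nesting level goes into the signature, then part/state qualifiers.
        sig = "/".join(self.path)
        if self.part is not None:
            sig += f"_p{self.part}"
        if self.state is not None:
            sig += f"_s-{self.state}"
        return sig

    def same_object(self, other: "ScanRecord") -> bool:
        # Parts and states of one physical object share the same nesting path.
        return self.path == other.path

    def contains(self, other: "ScanRecord") -> bool:
        # A container (stack, folder, stapled set) is an object in its own right;
        # it contains every record whose path strictly extends its own path.
        return len(other.path) > len(self.path) and other.path[: len(self.path)] == self.path
```

For instance, a pamphlet scanned once folded and once unfolded yields two rows with the signatures `stack1/folder03/sheet02_s-folded` and `stack1/folder03/sheet02_s-unfolded`, which `same_object` relates back to one physical sheet, while the separately photographed folder `stack1/folder03` `contains` both. Encoding the full nesting path rather than a flat running number keeps the "as-is" structure recoverable from the signature alone.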

[image of a complex case]

Additionally, a free tagging system was used to record document features such as “printed”, “handwritten”, “typed copy”, “annotations” and “map”. These tags were not standardized, nor did we create a fixed typology; during scanning, tags were chosen freely by the person scanning. Only at a later stage, after a significant amount of material had been digitized, were the tags compared and opportunities for normalization discussed. This followed from the guiding principle of digitizing naively and without a predetermined archival view. Tags were not used to file objects into an existing typology, but to convey those features of the object that the person handling it deemed noteworthy or significant.
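The later comparison step can be pictured with a small sketch: first count the tags exactly as they were typed, then apply whatever mapping the team agrees on. The function names and the example mapping are hypothetical; the sketch only assumes that normalization is an explicit, discussed lookup table rather than an automatic typology.

```python
from collections import Counter


def tag_frequencies(tag_lists: list[list[str]]) -> Counter:
    """Count tags exactly as typed (only surrounding whitespace is stripped)."""
    return Counter(tag.strip() for tags in tag_lists for tag in tags)


def normalize(tags: list[str], mapping: dict[str, str]) -> list[str]:
    """Apply an agreed mapping from lowercase variants to preferred tags.

    Tags without an entry in the mapping are kept as typed rather than
    forced into a typology; duplicates created by the mapping are dropped
    while the original order is preserved.
    """
    result: list[str] = []
    for tag in tags:
        cleaned = tag.strip()
        preferred = mapping.get(cleaned.lower(), cleaned)
        if preferred not in result:
            result.append(preferred)
    return result
```

With a hypothetical mapping such as `{"annotated": "annotations", "annotations": "annotations"}`, the tags `["printed", "Annotations", "annotated"]` become `["printed", "annotations"]`, while a tag nobody has discussed yet passes through unchanged. Keeping the raw counts separate from the mapping leaves the original, freely chosen vocabulary available for comparison.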

On the other hand, the top-down principle was used to describe togetherness independently of the scanning activities. This description was guided by archival best practices, using Encoded Archival Description (EAD) to create a finding aid [hyperlink to our EAD page?]. Details of this approach can be found on Digital Occasion Map under Finding Aid EAD.

Institutional Digitization

The Harold Garfinkel Archive carried out a traditional digitization campaign almost simultaneously with the activities in Germany, which created an interesting opportunity for a comparative study. The archive worked with IDI (Innovative Document Imaging), one of the largest commercial digitization service providers in the US, which has more than 20 years of experience in digitizing documents for businesses, government agencies, libraries and archives. At this scale it is not surprising that IDI relies on formal best practices and guidelines, but on closer inspection it is clearly the ad hoc decisions that ultimately constitute the objects.

The campaign started on-site at the Harold Garfinkel Archive in close collaboration with its director, Anne Rawls. This phase, described as becoming accustomed to the material and to the demands of the researchers, is essential to the way IDI approaches any digitization project.

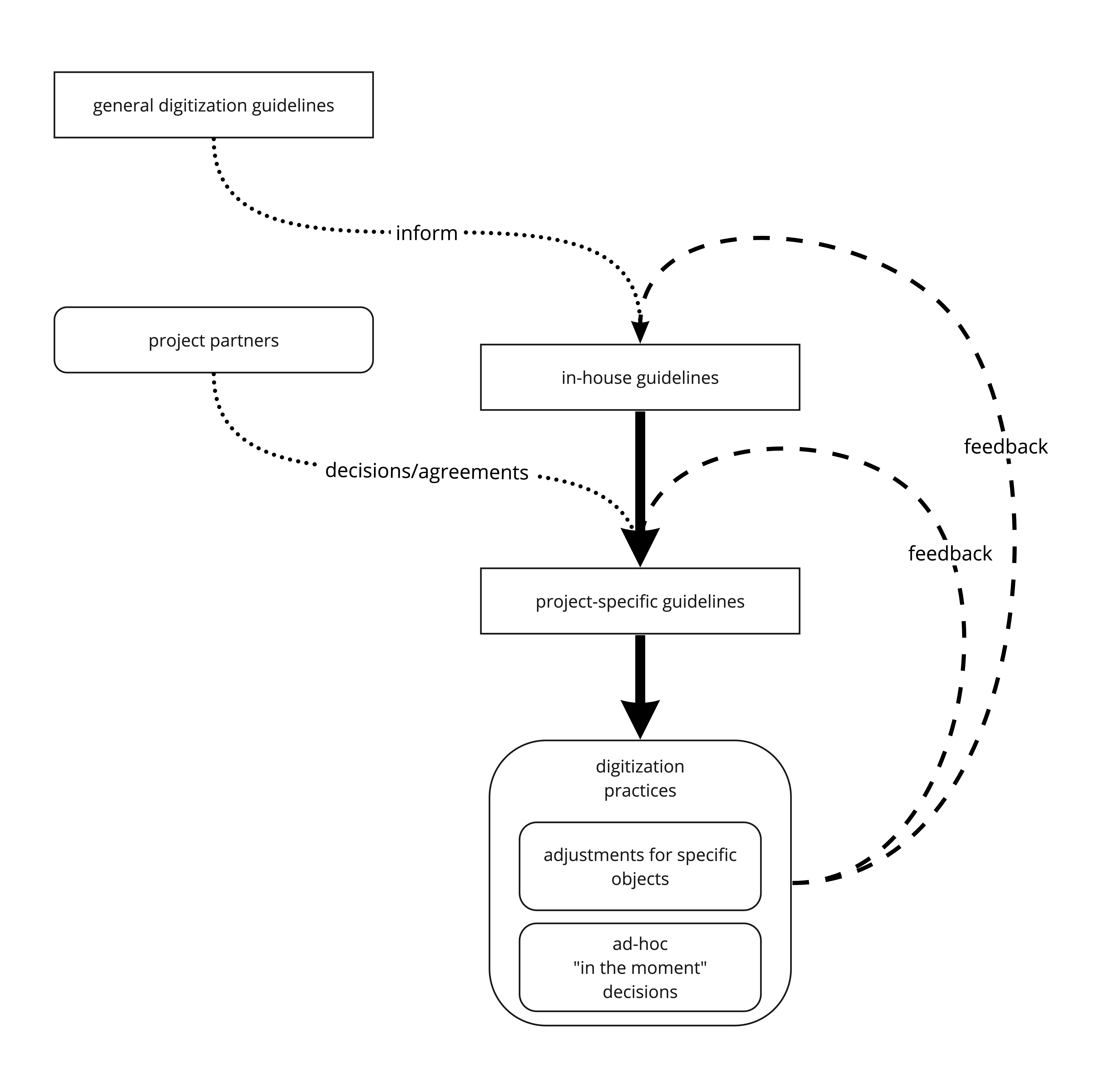

From the start, a process of making specific decisions accountable takes place. IDI uses “in-house guidelines”, which condense established general guidelines, especially the Technical Guidelines for Digitizing Cultural Heritage Materials: Creation of Raster Image Files from FADGI (Federal Agencies Digitization Guidelines Initiative), together with years of lived work in the field. These guidelines do not directly accompany any specific digitization campaign; instead, together with the decisions and agreements made with the project partners, they inform the “project-specific guidelines”, thereby making project-specific decisions accountable. The phase of becoming accustomed to the material helps to determine these specific guidelines, which the scanning operators then follow.

Although these project-specific guidelines are adjusted through continuous feedback loops, relying on ad hoc decisions to make heterogeneous objects processable is unavoidable. At the request of the Harold Garfinkel Archive, and in a nod to the ethnomethodological context, IDI produced a short video documenting the in-situ digitization practices and the interactions between supervisor and scanning operator:

[A] should I capture this this way or should I remove the documents first?

[B] hm ... I would say, turn it around and capture the folder as is with the title facing out first

These quotes show that even though multiple layers of formal guidelines and best practices exist, the actual practices are still driven to a significant degree by subjective ad hoc decisions. On the one hand, the technological parameters are standardized and aimed at consistency: the equipment at a service provider like IDI is industry standard, and where possible the same camera system with consistent settings is used throughout a project. On the other hand, the questions of what constitutes an object to be scanned, which aspects of that object are important, and how those aspects are digitized within the technological limitations are not self-evident and have to be settled in situ by the supervisor and the operator.

Descriptive metadata is generated during the scanning process using a proprietary tracking system that records the title, the number of scans and possible “anomalies” for each object. This raises the question of the level of granularity at which this metadata is collected, that is, of what constitutes an object. At first glance, objects appear to be folders and subfolders, which are given a title, an index number and the number of pages scanned. No metadata is recorded for individual leaves within such a folder. The “title” metadata field contains either the given title of an object, if it had one, or a short descriptive title created by the operators. Two arbitrary examples are:

- 15. Wittgenstein (29 pages)

- 118. Two ring binder brown - Exam booklet, questions, templates and answers... (hand writing with damaged pages) - Seminar, class papers and notes as well (190 pages)

The former object is defined purely by its content, with no descriptive information about the type of document. The latter is defined purely by a lengthy descriptive note about its type and material condition. Some further descriptive information is recorded in the optional metadata field “anomalies”:

- “Lots of loose papers and documents stapled and paper clipped; quality is poor on some pages; best possible shots”

- “Pages creased with some loss of print (...) many foldouts with some bound into book so scanning limited”

These comments show that, although careful formal steps were taken, some objects proved not to be fully processable, and a verbal explanation was needed to partly compensate for this deficit. As Jordan Barron, Sales Manager at IDI, states:

“We have the responsibility to handle the material in person physically. The researcher later doesn’t have that. So to give them the best possible perspective, the most relevant information, that is what we are trying to do”

Ultimately, what can be observed here is the tension between formal processes, developed to digitize as much material as possible in an economically viable and consistent way, and the necessity of accurately representing the objects being digitized. The alleged firmness of guidelines creates an illusion of security and objectivity regarding the constitution of objects. However, the definition of objects actually relies heavily on ad hoc decisions about granularity, document groupings and descriptions.

In contrast, the digitization of the Occasion Map Papers treated each scan as an individual object and recorded the given material condition as the basis for grouping. This bottom-up approach allowed more flexibility for ad hoc decisions during the scanning process. The results of the digitization informed the creation of a finding aid, which followed a top-down process, taking every page into account and creating groupings in a more epistemic manner. The results of both approaches were then brought together in Omeka S, a content management system built on Linked Open Data techniques. To achieve this, a data model was created that can carry both approaches and put them in a meaningful relation. Details can be found on Digital Occasion Map under Finding Aid and Omeka S.
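The idea of one set of scans carrying two groupings at once can be illustrated with a minimal sketch. It is not the project's Omeka S data model: the field names `physical` and `component`, the identifiers and the example data are invented for illustration. It only assumes that each scan keeps a reference both to its physical container from the bottom-up sheet and to the finding-aid component from the top-down EAD description.

```python
from collections import defaultdict

# Hypothetical example data: each scan records its physical container
# (bottom-up scan sheet) and the finding-aid component it was assigned to
# (top-down EAD description). All identifiers are invented.
SCANS = [
    {"id": "scan-001", "physical": "stack1/folder03", "component": "series-A/file-2"},
    {"id": "scan-002", "physical": "stack1/folder03", "component": "series-B/file-1"},
    {"id": "scan-003", "physical": "stack2/folder01", "component": "series-A/file-2"},
]


def group_by(scans: list[dict], key: str) -> dict[str, set[str]]:
    """Group scan ids under either the physical or the intellectual hierarchy."""
    groups: dict[str, set[str]] = defaultdict(set)
    for scan in scans:
        groups[scan[key]].add(scan["id"])
    return dict(groups)


def divergences(scans: list[dict]) -> dict[str, set[str]]:
    """Physical containers whose contents the finding aid spreads over several components."""
    components: dict[str, set[str]] = defaultdict(set)
    for scan in scans:
        components[scan["physical"]].add(scan["component"])
    return {c: comps for c, comps in components.items() if len(comps) > 1}
```

Here `divergences(SCANS)` reports that the hypothetical folder `stack1/folder03` was split across two finding-aid components. Keeping both references, rather than merging them into a single hierarchy, means such regroupings stay visible instead of one view overwriting the other.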